Can AI have a mind of its own? 6 Minute English | by 700 Eth | May, 2023 | Medium

Hello. This is 6 Minute English from BBC Learning English. I'm Sam. And I'm Neil. In the autumn of 2021, something strange happened at the Google headquarters in California's Silicon Valley. A software engineer called Blake Lemoine was working on the artificial intelligence project ‘Language Models for Dialogue Applications’, or LaMDA for short.

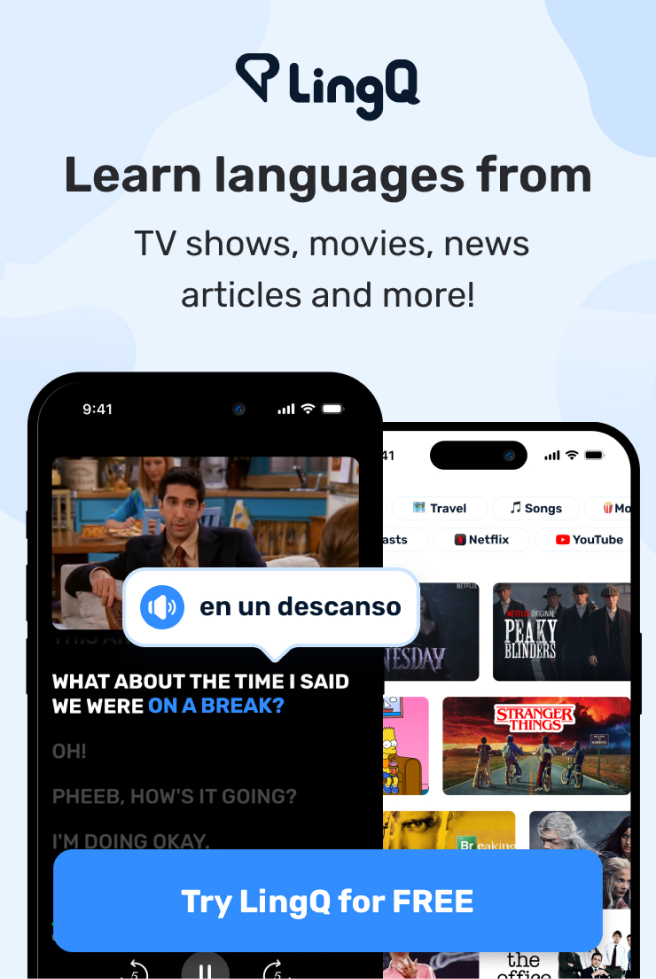

LaMDA is a chatbot — a computer programme designed to have conversations with humans over the internet. After months of talking with LaMDA on topics ranging from movies to the meaning of life, Blake came to a surprising conclusion: the chatbot was an intelligent person with wishes and rights that should be respected. For Blake, LaMDA was a Google employee, not a machine.

He also called it his ‘friend’. Google quickly reassigned Blake from the project, announcing that his ideas were not supported by the evidence. But what exactly was going on? In this programme, we'll be discussing whether artificial intelligence is capable of consciousness. We'll hear from one expert who thinks AI is not as intelligent as we sometimes think, and as usual, we'll be learning some new vocabulary as well.

But before that, I have a question for you, Neil. What happened to Blake Lemoine is strangely similar to the 2013 Hollywood movie Her, starring Joaquin Phoenix as a lonely writer who talks with his computer, voiced by Scarlett Johansson. But what happens at the end of the movie? Is it: a) the computer comes to life? b) the computer dreams about the writer? or c) the writer falls in love with the computer? … c) the writer falls in love with the computer.

OK, Neil, I'll reveal the answer at the end of the programme. Although Hollywood is full of movies about robots coming to life, Emily Bender, a professor of linguistics and computing at the University of Washington, thinks AI isn't that smart. She thinks the words we use to talk about technology, phrases like ‘machine learning’, give a false impression about what computers can and can't do.

Here is Professor Bender discussing another misleading phrase, ‘speech recognition’, with the BBC World Service programme The Inquiry: If you talk about ‘automatic speech recognition’, the term ‘recognition’ suggests that there's something cognitive going on, where I think a better term would be automatic transcription.

That just describes the input-output relation, and not any theory or wishful thinking about what the computer is doing to be able to achieve that. Using words like ‘recognition’ in relation to computers gives the idea that something cognitive is happening — something related to the mental processes of thinking, knowing, learning and understanding.

But thinking and knowing are human, not machine, activities. Professor Bender says that talking about them in connection with computers is wishful thinking — something which is unlikely to happen. The problem with using words in this way is that it reinforces what Professor Bender calls technical bias — the assumption that the computer is always right.

When we encounter language that sounds natural, but is coming from a computer, humans can't help but imagine a mind behind the language, even when there isn't one. In other words, we anthropomorphise computers — we treat them as if they were human. Here's Professor Bender again, discussing this idea with Charmaine Cozier, presenter of BBC World Service's The Inquiry.

So ‘ism’ means system, ‘anthro’ or ‘anthropo’ means human, and ‘morph’ means shape… And so this is a system that puts the shape of a human on something, and in this case the something is a computer. We anthropomorphise animals all the time, but we also anthropomorphise action figures, or dolls, or companies when we talk about companies having intentions and so on.

We very much are in the habit of seeing ourselves in the world around us. And while we're busy seeing ourselves by assigning human traits to things that are not, we risk being blindsided. The more fluent that text is, the more different topics it can converse on, the more chances there are to get taken in. If we treat computers as if they could think, we might get blindsided, or unpleasantly surprised.

Artificial intelligence works by finding patterns in massive amounts of data, so it can seem like we're talking with a human, instead of a machine doing data analysis. As a result, we get taken in — we're tricked or deceived into thinking we're dealing with a human, or with something intelligent. Powerful AI can make machines appear conscious, but even tech giants like Google are years away from building computers that can dream or fall in love.

Speaking of which, Sam, what was the answer to your question? I asked what happened in the 2013 movie, Her. Neil thought that the main character falls in love with his computer, which was the correct answer! OK. Right, it's time to recap the vocabulary we've learned from this programme about AI, including chatbots — computer programmes designed to interact with humans over the internet.

The adjective cognitive describes anything connected with the mental processes of knowing, learning and understanding. Wishful thinking means thinking that something which is very unlikely to happen might happen one day in the future. To anthropomorphise an object means to treat it as if it were human, even though it's not.

When you're blindsided, you're surprised in a negative way. And finally, to get taken in by someone means to be deceived or tricked by them. My computer tells me that our six minutes are up! Join us again soon. For now, it's goodbye from us. Bye!